LeNet - Traffic Signs Classification

Code

[Notice] download here

Observing the dataset

Classes are as listed below:

- ( 0, b’Speed limit (20km/h)’) ( 1, b’Speed limit (30km/h)’)

- ( 2, b’Speed limit (50km/h)’) ( 3, b’Speed limit (60km/h)’)

- ( 4, b’Speed limit (70km/h)’) ( 5, b’Speed limit (80km/h)’)

- ( 6, b’End of speed limit (80km/h)’) ( 7, b’Speed limit (100km/h)’)

- ( 8, b’Speed limit (120km/h)’) ( 9, b’No passing’)

- (10, b’No passing for vehicles over 3.5 metric tons’)

- (11, b’Right-of-way at the next intersection’) (12, b’Priority road’)

- (13, b’Yield’) (14, b’Stop’) (15, b’No vehicles’)

- (16, b’Vehicles over 3.5 metric tons prohibited’) (17, b’No entry’)

- (18, b’General caution’) (19, b’Dangerous curve to the left’)

- (20, b’Dangerous curve to the right’) (21, b’Double curve’)

- (22, b’Bumpy road’) (23, b’Slippery road’)

- (24, b’Road narrows on the right’) (25, b’Road work’)

- (26, b’Traffic signals’) (27, b’Pedestrians’) (28, b’Children crossing’)

- (29, b’Bicycles crossing’) (30, b’Beware of ice/snow’)

- (31, b’Wild animals crossing’)

- (32, b’End of all speed and passing limits’) (33, b’Turn right ahead’)

- (34, b’Turn left ahead’) (35, b’Ahead only’) (36, b’Go straight or right’)

- (37, b’Go straight or left’) (38, b’Keep right’) (39, b’Keep left’)

- (40, b’Roundabout mandatory’) (41, b’End of no passing’)

- (42, b’End of no passing by vehicles over 3.5 metric tons’)

The network used here is Le-Net, a convolutional neural network introduced by Yann LeCun.

Learning Goals

- Classify traffic signs with Le-Net, a deep neural network.

- Traffic sign recognition is of particular interest for self-driving cars, which must detect objects and read traffic signs through a camera and then act on them appropriately.

- Understand activation functions such as Sigmoid and ReLU.

- Design a deep convolutional neural network (CNN) with the Keras API and tune its architecture for better classification performance.

- Understand cross-validation and how to keep the network from overfitting.

- Evaluate the model and present the results with a confusion matrix and a classification report.

- precision & recall

Le-Net

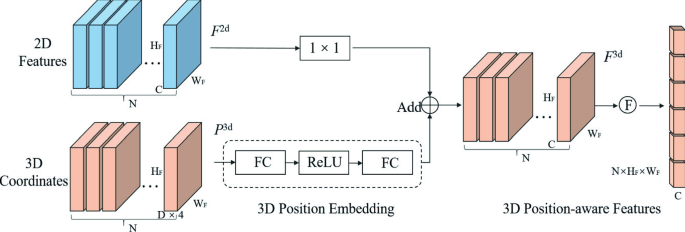

- STEP 1: THE FIRST CONVOLUTIONAL LAYER #1

- Input = 32x32x1

- Output = 28x28x6

- Output = (Input - filter)/stride + 1 => (32 - 5)/1 + 1 = 28

- Uses a 5x5 filter with an input depth of 1 and an output depth of 6

- Apply a RELU Activation function to the output

- Pooling with Input = 28x28x6 and Output = 14x14x6

- Stride is the amount by which the kernel is shifted when the kernel is passed over the image.

- STEP 2: THE SECOND CONVOLUTIONAL LAYER #2

- Input = 14x14x6

- Output = 10x10x16

- Layer 2: Convolutional layer with Output = 10x10x16

- Output = (Input - filter)/stride + 1 => (14 - 5)/1 + 1 = 10

- Apply a RELU Activation function to the output

- Pooling with Input = 10x10x16 and Output = 5x5x16

- STEP 3: FLATTENING THE NETWORK

- Flatten the network with Input = 5x5x16 and Output = 400

- STEP 4: FULLY CONNECTED LAYER

- Layer 3: Fully Connected layer with Input = 400 and Output = 120

- Apply a RELU Activation function to the output

- STEP 5: ANOTHER FULLY CONNECTED LAYER

- Layer 4: Fully Connected Layer with Input = 120 and Output = 84

- Apply a RELU Activation function to the output

- STEP 6: FULLY CONNECTED LAYER

- Layer 5: Fully Connected layer with Input = 84 and Output = 43
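The layer sizes listed above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the helper names `conv_out` and `pool_out` are hypothetical, and it assumes unpadded convolutions with stride 1 and 2x2 pooling with stride 2, which is what the sizes in the list imply.

```python
def conv_out(size, kernel, stride=1):
    """Spatial output size of an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1


def pool_out(size, window=2):
    """Spatial output size of non-overlapping pooling (stride == window)."""
    return size // window


c1 = conv_out(32, 5)     # first convolution:  32 -> 28
p1 = pool_out(c1)        # first pooling:      28 -> 14
c2 = conv_out(p1, 5)     # second convolution: 14 -> 10
p2 = pool_out(c2)        # second pooling:     10 -> 5
flat = p2 * p2 * 16      # flatten 5x5x16 into a vector

print(c1, p1, c2, p2, flat)   # 28 14 10 5 400
```

The 400 flattened values then feed the fully connected layers of 120, 84, and 43 units.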

Loading the dataset

import pickle # Serialize the data

import seaborn as sns

import pandas as pd # Import Pandas for data manipulation using dataframes

import numpy as np # Import Numpy for data statistical analysis

import matplotlib.pyplot as plt # Import matplotlib for data visualisation

import random

Serialization: the process of converting an object into a stream of bytes so that it can be stored or transmitted. `pickle.load` reverses this and rebuilds the object from a file.
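As a small illustration of that round trip, the sketch below serializes a dictionary to bytes and restores it. The `sample` dictionary is a made-up miniature stand-in for the contents of `train.p`, with the same two keys but invented values.

```python
import pickle

# Hypothetical miniature stand-in for train.p: same keys, invented values
sample = {"features": [[0, 128, 255], [10, 20, 30]], "labels": [36, 14]}

blob = pickle.dumps(sample)      # object -> bytes (serialization)
restored = pickle.loads(blob)    # bytes -> object (deserialization)

print(type(blob).__name__)       # bytes
print(restored == sample)        # True
```

Because unpickling can execute arbitrary code, pickle files should only be loaded from sources you trust.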

# Loading the dataset

with open("./traffic-signs-data/train.p", mode='rb') as training_data:

    train = pickle.load(training_data)

with open("./traffic-signs-data/valid.p", mode='rb') as validation_data:

    valid = pickle.load(validation_data)

with open("./traffic-signs-data/test.p", mode='rb') as testing_data:

    test = pickle.load(testing_data)

X_train, y_train = train['features'], train['labels']

X_validation, y_validation = valid['features'], valid['labels']

X_test, y_test = test['features'], test['labels']

X_train.shape

(34799, 32, 32, 3)

y_train.shape

(34799,)

i = 1001

plt.imshow(X_train[i]) # show the image

y_train[i]

36

The result shows that this image has class index 36, which corresponds to ‘Go straight or right’.

Image Preprocessing

## Shuffle the dataset

from sklearn.utils import shuffle

X_train, y_train = shuffle(X_train, y_train)

The images are shuffled during preprocessing to avoid overfitting to the order in which they are stored.

Otherwise, the model would see the images in the same order every time and could overfit to that ordering.
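What matters when shuffling is that images and labels are reordered with the same permutation, which is what `sklearn.utils.shuffle` does when given several arrays. The sketch below reproduces that idea with NumPy only, on made-up toy data in which every "image" is filled with its own label value so the pairing is easy to verify.

```python
import numpy as np

rng = np.random.default_rng(0)            # fixed seed so the run is repeatable

# Toy data: image i is a 2x2 array filled with the value of label i
labels = np.arange(10)
images = np.repeat(labels, 4).reshape(10, 2, 2)

perm = rng.permutation(len(labels))       # one shared random ordering
images_shuffled = images[perm]
labels_shuffled = labels[perm]

# Every image still sits next to its own label
print(bool(np.all(images_shuffled[:, 0, 0] == labels_shuffled)))   # True
```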

# Color image --> grayscale image (average the three RGB channels into one)

X_train_gray = np.sum(X_train/3, axis=3, keepdims=True) # keepdims=True keeps the channel axis, so the array stays 4-D

X_test_gray = np.sum(X_test/3, axis=3, keepdims=True)

X_validation_gray = np.sum(X_validation/3, axis=3, keepdims=True)

All images are converted from RGB to grayscale so that color does not confuse the classifier.

np.sum()

- [axis](https://stackoverflow.com/questions/51628437/compute-the-sum-of-the-red-green-and-blue-channels-using-python): sum along the given axis only

- e.g., for shape (34799, 32, 32, 3), axis=2 refers to the second 32 and axis=3 refers to the 3. Thus, ‘axis=3’ sums over the RGB channels.

- keepdims: keep the summed axis in the result, with length 1

- If the input has n dimensions, summing along one axis returns n-1 dimensions. ‘keepdims=True’ makes the result keep all n dimensions.

RGB consists of three channels, so summing the R, G, and B values of each pixel and dividing by 3 gives their average, which removes the color.
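The effect of `axis` and `keepdims` is easiest to see from the resulting shapes. The sketch below uses a small random array named `batch` as a hypothetical stand-in for `X_train`; only the shapes and the averaging behaviour are the point.

```python
import numpy as np

# Hypothetical stand-in for X_train: 4 random RGB images of 32x32 pixels
batch = np.random.randint(0, 256, size=(4, 32, 32, 3)).astype(np.uint8)

gray_keep = np.sum(batch / 3, axis=3, keepdims=True)   # channel axis kept
gray_drop = np.sum(batch / 3, axis=3)                  # channel axis removed

print(gray_keep.shape)   # (4, 32, 32, 1)
print(gray_drop.shape)   # (4, 32, 32)

# Summing x/3 over the channels is the same as averaging the channels
same = bool(np.allclose(gray_keep, batch.mean(axis=3, keepdims=True)))
print(same)              # True
```

Keeping the last axis matters later, because the first `Conv2D` layer expects inputs of shape (32, 32, 1).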

# normalization

X_train_gray_norm = (X_train_gray - 128)/128

X_test_gray_norm = (X_test_gray - 128)/128

X_validation_gray_norm = (X_validation_gray - 128)/128

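Why normalize with the number 128? Pixel values range from 0 to 255, so subtracting 128 and dividing by 128 centers them around zero and scales them to roughly the range -1 to 1.

In general, 128 or 256 is commonly used as the normalizing constant.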
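To see what the normalization does to actual pixel values, the sketch below applies the same formula to a handful of hand-picked intensities. The `pixels` array is made up for illustration.

```python
import numpy as np

pixels = np.array([0, 64, 128, 192, 255], dtype=np.float64)
normalized = (pixels - 128) / 128

# Darkest pixel maps to -1, mid-gray to 0, brightest to just under 1
print(normalized.min(), normalized.max())   # -1.0 0.9921875
```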

# data visualization

i = 610

plt.imshow(X_train_gray[i].squeeze(), cmap='gray')

plt.figure()

plt.imshow(X_train[i])

[dark]

[original]

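squeeze removes axes of length 1: [[1, 2, 3, 4]] –> [1, 2, 3, 4]. Here it turns each (32, 32, 1) image into the (32, 32) shape that imshow expects for a grayscale image.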

Training the dataset

# Importing the libraries

from keras.models import Sequential

from keras.layers import Conv2D, MaxPooling2D, AveragePooling2D, Dense, Flatten, Dropout

from keras.optimizers import Adam

from keras.callbacks import TensorBoard

from sklearn.model_selection import train_test_split

# Building the model

cnn_model = Sequential()

cnn_model.add(Conv2D(filters=6, kernel_size=(5, 5), activation='relu', input_shape=(32,32,1)))

cnn_model.add(AveragePooling2D())

cnn_model.add(Conv2D(filters=16, kernel_size=(5, 5), activation='relu'))

cnn_model.add(AveragePooling2D())

cnn_model.add(Flatten())

cnn_model.add(Dense(units=120, activation='relu'))

cnn_model.add(Dense(units=84, activation='relu'))

cnn_model.add(Dense(units=43, activation = 'softmax'))

# Training the model

cnn_model.compile(loss='sparse_categorical_crossentropy', optimizer=Adam(lr=0.001), metrics=['accuracy']) # newer Keras releases name this argument learning_rate instead of lr

compile()

- sparse_categorical_crossentropy: categorical cross-entropy loss for multi-class classification, used when the labels are integer class indices (0 to 42 here) rather than one-hot vectors

- With only two classes, binary cross-entropy (‘binary_crossentropy’) is used instead.

- Adam

- lr: learning rate
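The "sparse" variant computes the same quantity as ordinary categorical cross-entropy; it simply takes integer labels instead of one-hot vectors. The NumPy sketch below illustrates this on a toy 3-class example. It is not Keras's implementation (real implementations also guard against log(0)), and the function defined here is a hand-written illustration that only borrows the loss's name.

```python
import numpy as np


def sparse_categorical_crossentropy(y_true, probs):
    """Mean of -log(p[true class]); y_true holds integer class ids."""
    picked = probs[np.arange(len(y_true)), y_true]
    return float(np.mean(-np.log(picked)))


# Toy softmax outputs for 2 samples and 3 classes (instead of 43)
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1]])
y_int = np.array([0, 1])          # integer labels, like y_train
y_onehot = np.eye(3)[y_int]       # one-hot form of the same labels

sparse_loss = sparse_categorical_crossentropy(y_int, probs)
dense_loss = float(np.mean(-np.sum(y_onehot * np.log(probs), axis=1)))

print(bool(np.isclose(sparse_loss, dense_loss)))   # True
```

Since `y_train` already stores integer class indices, the sparse loss avoids a separate one-hot encoding step.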

history = cnn_model.fit(X_train_gray_norm,

                        y_train,

                        batch_size=500,

                        epochs=50,

                        verbose=1,

                        validation_data=(X_validation_gray_norm, y_validation)) # validation set, evaluated at the end of each epoch

Epoch 50/50

34799/34799 [==============================] - 13s 364us/step - loss: 0.0255 - acc: 0.9951 - val_loss: 0.7429 - val_acc: 0.8624

Evaluating the model performance

score = cnn_model.evaluate(X_test_gray_norm, y_test,verbose=0)

print('Test Accuracy : {:.4f}'.format(score[1]))

Test Accuracy : 0.8611

history.history.keys()

dict_keys(['val_loss', 'val_acc', 'loss', 'acc'])

# Accuracy distribution

accuracy = history.history['acc']

val_accuracy = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(accuracy))

plt.plot(epochs, accuracy, 'bo', label='Training Accuracy') # 'bo': blue dots

plt.plot(epochs, val_accuracy, 'b', label='Validation Accuracy') # 'b': solid blue line

plt.title('Training and Validation accuracy')

plt.legend()

# loss curves (drawn on a new figure so they do not overlap the accuracy plot)

plt.figure()

plt.plot(epochs, loss, 'ro', label='Training Loss')

plt.plot(epochs, val_loss, 'r', label='Validation Loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

Prediction

# get the predictions for the test data

predicted_classes = cnn_model.predict_classes(X_test_gray_norm)
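`predict_classes` returns, for each image, the index of the largest softmax output. Recent TensorFlow releases no longer provide this method on `Sequential`; in that case the usual replacement is `np.argmax(cnn_model.predict(X_test_gray_norm), axis=1)`. The sketch below shows only the argmax step, on a made-up probability array with 4 classes.

```python
import numpy as np

# Hypothetical softmax output for 3 images and 4 classes
probabilities = np.array([[0.1, 0.6, 0.2, 0.1],
                          [0.7, 0.1, 0.1, 0.1],
                          [0.2, 0.2, 0.1, 0.5]])

predicted = np.argmax(probabilities, axis=1)   # index of the largest score in each row
print(predicted.tolist())                      # [1, 0, 3]
```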

# predicted vs. actual

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, predicted_classes)

plt.figure(figsize = (25,25))

sns.heatmap(cm, annot=True)

In the confusion matrix, the values off the diagonal are the counts of misclassified images.
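Precision and recall, listed in the learning goals, can be read directly off the confusion matrix. The sketch below builds a small matrix by hand with the same layout as scikit-learn's `confusion_matrix` (rows are true classes, columns are predicted classes). The `confusion` helper and the toy labels are hypothetical and use 3 classes instead of 43.

```python
import numpy as np


def confusion(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)    # count each (true, predicted) pair
    return cm


y_true_toy = np.array([0, 0, 1, 1, 2, 2])
y_pred_toy = np.array([0, 1, 1, 1, 2, 0])

cm_toy = confusion(y_true_toy, y_pred_toy, 3)
correct = np.diag(cm_toy)                      # diagonal = correct predictions
errors = int(cm_toy.sum() - correct.sum())     # everything off the diagonal
precision = correct / cm_toy.sum(axis=0)       # per predicted class (column sums)
recall = correct / cm_toy.sum(axis=1)          # per true class (row sums)

print(cm_toy.tolist())   # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(errors)            # 2
```

On the real data, `sklearn.metrics.classification_report(y_test, predicted_classes)` reports the same per-class precision and recall.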

Now that we have predictions, let’s lay out 49 test images in a 7x7 grid so that we can compare the predicted classes with the true ones at a glance.

L = 7

W = 7

fig, axes = plt.subplots(L, W, figsize = (12,12))

axes = axes.ravel() # flatten the array

for i in np.arange(0, L * W):

    axes[i].imshow(X_test[i])

    axes[i].set_title("Prediction={}\n True={}".format(predicted_classes[i], y_test[i]))

    axes[i].axis('off') # remove axis

plt.subplots_adjust(wspace=1) # space out images
