[Paper Review] Weaker Than You Think: A Critical Look at Weakly Supervised Learning (ACL 2023)

One-line summary ✔

- Weakly supervised learning (WSL) usually comes with many noisy labels.

- This is why it still underperforms fully supervised models.

- The benefits of existing WSL methods are overstated.

- Clean validation samples:

- Data with correct labels that is used for validation, e.g. for early stopping or meta-learning.

- Adding these samples to the training set instead gives better performance.

- A model fine-tuned on them as training data outperforms recent WSL models that use them only as a validation set (Figure 1).

- WSL models do still perform better than using the weak labels alone.

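- Existing models simply learn to group inputs with similar linguistic correlations, and this bias can hurt generalization.

- Further tuning on samples whose clean label contradicts the weak one (e.g., a negative label on text the weak labels treat as positive) → better generalization.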

- Contributions:

- Fine-tuning with the validation samples used as training data.

- Revisiting the true benefits of WSL.

Preliminaries 🍱

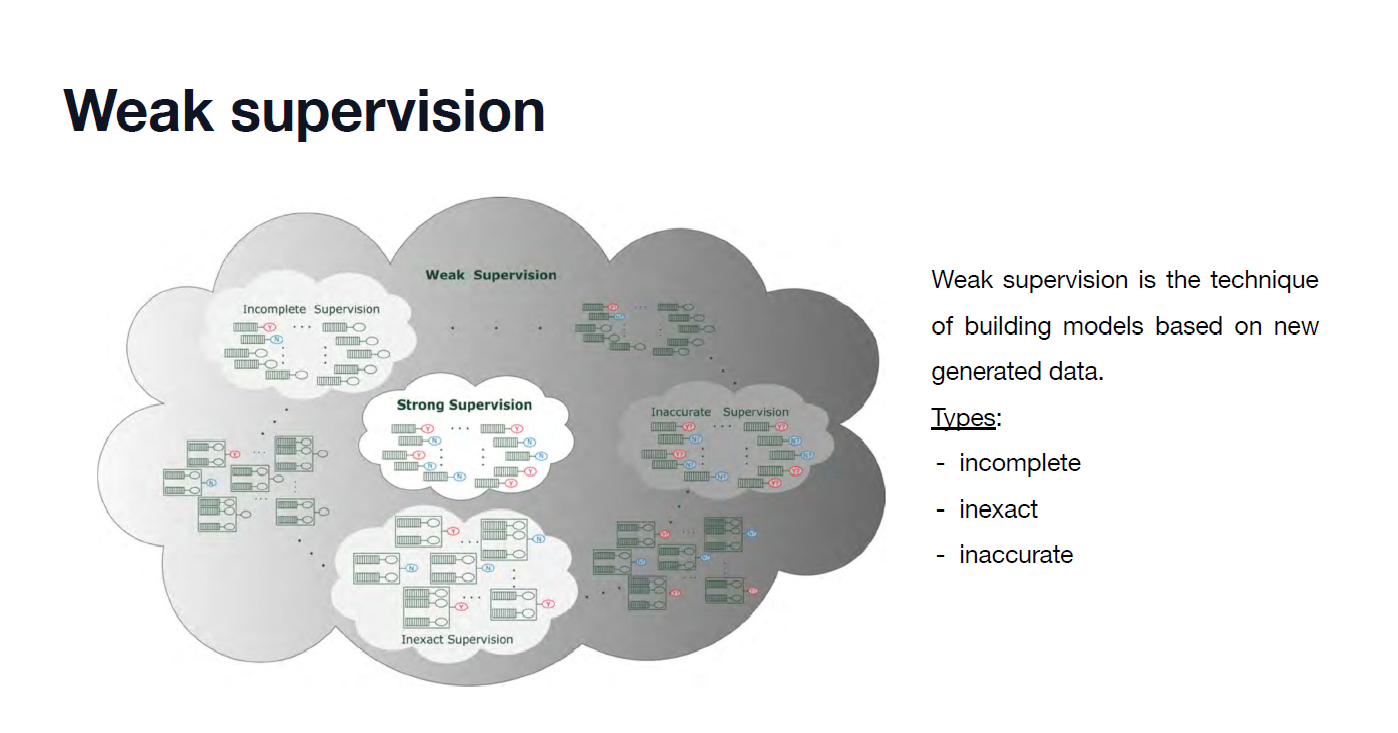

Weak Supervision

- definition

- proposed to ease the annotation bottleneck in training machine learning models.

- uses weak sources to automatically annotate the data

- drawback

- its annotations could be noisy (some annotations are incorrect); causing poor generalization

- solutions

- to re-weight the impact of examples in the loss computation

- to train noise-robust models using knowledge distillation (KD)

- to equip such models with meta-learning when they prove fragile

- to leverage the knowledge in pre-trained language models

- datasets

- WRENCH

- WALNUT
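As a rough illustration of how weak sources can annotate data automatically and why the result is noisy, here is a minimal sketch with three keyword rules combined by majority vote. The rules, the label constants, and the example reviews are all made up for illustration; they are not taken from the paper, WRENCH, or WALNUT.

```python
from collections import Counter

ABSTAIN, NEGATIVE, POSITIVE = -1, 0, 1

# Hypothetical weak sources: each rule votes for a label or abstains.
def lf_contains_great(text):
    return POSITIVE if "great" in text.lower() else ABSTAIN

def lf_contains_terrible(text):
    return NEGATIVE if "terrible" in text.lower() else ABSTAIN

def lf_exclamation(text):
    # Deliberately crude: angry reviews also end with "!", which causes noise.
    return POSITIVE if text.endswith("!") else ABSTAIN

LABELING_FUNCTIONS = [lf_contains_great, lf_contains_terrible, lf_exclamation]

def weak_label(text):
    """Majority vote over the rules that fire; ABSTAIN on no votes or a tie."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    counts = Counter(votes).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return ABSTAIN
    return counts[0][0]

# (text, gold label) pairs; the gold labels are what a human would assign.
reviews = [
    ("The food was great", POSITIVE),
    ("Terrible service, never again", NEGATIVE),
    ("Worst meal of my life!", NEGATIVE),
]
weak = [weak_label(text) for text, _ in reviews]
noisy = [text for (text, gold), w in zip(reviews, weak) if w != gold]
print(weak, noisy)
```

The third review gets a positive weak label although its gold label is negative, which is the kind of incorrect annotation the drawback above refers to.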

Realistic Evaluation

Semi-supervised Learning

- often trains with a few hundred training examples while retaining thousands of validation samples for model selection

- There is a limit to how many labels humans can attach by hand.

- discard the validation set and use a fixed set of hyperparameters across datasets

- A person searches for the best hyperparameter combination for each dataset, one by one.

- prompt-based few-shot learning

- sensitive to prompt selection and requires additional data for prompt evaluation

- This contradicts the premise of few-shot learning!

- Example prompt: "Decide whether the following review is positive or negative:"

- recent work

- skips fine-grained model selection

- Number of validation samples strictly controlled

Significance

- To our knowledge, no similar work exists exploring the aforementioned problems in the context of weak supervision.

Challenges and Main Idea💣

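C1) Existing WSL methods overstate how well they make use of data.

Idea) Simply adding the clean validation samples to the training set gives better performance.

C2) Existing WSL methods are biased by the way they learn.

Idea) Further tuning on samples whose clean label contradicts the weak one (e.g., a negative label on text the weak labels treat as positive) → better generalization.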

Problem Definition ❤️

Given a model pre-trained on \(D_w \sim \mathcal{D}_n\).

Return a model.

Such that it generalizes well on \(D_{test} \sim \mathcal{D}_c\).

Methodology 👀

Setup

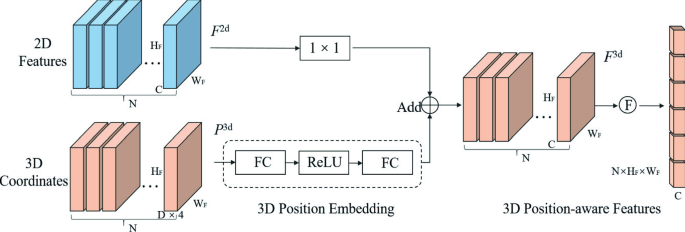

Formulation

- \(\mathcal{X}\): feature space.

- \(\mathcal{Y}\): label space.

- \(\hat{y}_i\): labels obtained from weak labeling sources; could be different from the GT label \(y_i\).

- \(D=\{(x_i,y_i)\}^N_{i=1}\).

- \(\mathcal{D}_c\): clean data distribution.

- \(D_w\): weakly labeled dataset.

- \(\mathcal{D}_n\): noisy distribution.

- The goal of WSL algorithms is to obtain a model that generalizes well on \(D_{test} \sim \mathcal{D}_c\) despite being trained on \(D_w \sim \mathcal{D}_n\).

- baseline: RoBERTa-base.

Datasets

- eight datasets covering different NLP tasks in English

WSL baselines

- \(\text{FT}_W\): standard fine-tuning approach for WSL.

- L2R: meta-learning to determine the optimal weights for each noisy training sample.

- MLC: meta-learning for the meta-model to correct the noisy labels.

- BOND: noise-aware self-training framework designed for learning with weak annotations.

- COSINE: self-training with contrastive regularization to improve noise robustness further.

y-axis (relative performance improvement over weak labels):

\[G_{\alpha}=\frac{P_{\alpha}-P_{WL}}{P_{WL}}\]

- \(P_{\alpha}\): the performance achieved by a certain WSL method.

- \(P_{WL}\): the performance achieved by the weak labels.
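To make the y-axis quantity concrete, the sketch below computes the relative improvement \(G_{\alpha}\), taking \(P_{\alpha}\) as the score of a WSL method and \(P_{WL}\) as the score of the weak labels themselves. The function name and the scores in the usage lines are made-up illustration values, not results from the paper.

```python
def relative_improvement(p_method: float, p_weak: float) -> float:
    """G_alpha = (P_alpha - P_WL) / P_WL, as a fraction (0.05 means +5%)."""
    if p_weak <= 0:
        raise ValueError("the weak-label score must be positive")
    return (p_method - p_weak) / p_weak

# Hypothetical scores: weak labels reach 0.80, two imaginary methods 0.84 and 0.72.
gain = relative_improvement(0.84, 0.80)   # positive: better than the weak labels
loss = relative_improvement(0.72, 0.80)   # negative: worse than the weak labels
print(f"{gain:+.1%} {loss:+.1%}")
```

A value at or below zero means the method brings no benefit over simply using the weak labels as predictions.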

⇒ Without clean validation samples, existing WSL approaches do not work.

Is WSL really bound to perform poorly without clean data?

Clean Data

⇒ a small amount of clean validation samples may be sufficient for current WSL methods to achieve good performance

⇒ the advantage of using WSL approaches vanishes when we have as few as 10 clean samples per class
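Statements such as "10 clean samples per class" assume the clean set is drawn with a fixed budget per label. A minimal sketch of such a draw is shown below; the helper name `sample_per_class`, its arguments, and the toy data are assumptions made for illustration and do not reproduce the paper's sampling code.

```python
import random
from collections import defaultdict

def sample_per_class(examples, n_per_class, seed=0):
    """Draw up to n_per_class examples for every label.

    examples: list of (text, label) pairs with clean labels.
    """
    rng = random.Random(seed)          # local RNG keeps the draw reproducible
    by_label = defaultdict(list)
    for example in examples:
        by_label[example[1]].append(example)
    subset = []
    for label in sorted(by_label):
        pool = by_label[label]
        k = min(n_per_class, len(pool))  # a class may have fewer examples
        subset.extend(rng.sample(pool, k))
    return subset

# Toy clean pool with two balanced classes.
pool = [(f"doc{i}", i % 2) for i in range(50)]
clean_subset = sample_per_class(pool, n_per_class=10)
print(len(clean_subset))
```

Varying `n_per_class` is one way to study how a method's advantage changes with the number of clean samples.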

Continuous Fine-tuning (CFT)

- CFT

- In the first phase, we apply WSL approaches on the weakly labeled training set, using the clean data for validation.

- In the second phase, we take the model trained on the weakly labeled data as a starting point and continue to train it on the clean data.
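The two phases can be sketched end to end on a toy problem. The paper fine-tunes RoBERTa-base; the code below swaps in a tiny logistic regression trained by full-batch gradient descent so that it runs with the standard library only. All function names, the hyperparameters, and the toy data are assumptions for illustration.

```python
import math

def predict_proba(w, x):
    # Clamp the logit so math.exp cannot overflow on extreme inputs.
    z = max(-30.0, min(30.0, sum(wi * xi for wi, xi in zip(w, x))))
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(w, data):
    total = 0.0
    for x, y in data:
        p = predict_proba(w, x)
        total -= math.log(p) if y == 1 else math.log(1.0 - p)
    return total / len(data)

def accuracy(w, data):
    hits = sum((predict_proba(w, x) >= 0.5) == (y == 1) for x, y in data)
    return hits / len(data)

def gradient_step(w, data, lr):
    """One full-batch gradient-descent step on the logistic loss."""
    grad = [0.0] * len(w)
    for x, y in data:
        err = predict_proba(w, x) - y
        for j, xj in enumerate(x):
            grad[j] += err * xj / len(data)
    return [wj - lr * gj for wj, gj in zip(w, grad)]

def continuous_fine_tuning(weak_train, clean, steps=200, lr=0.5):
    w = [0.0] * len(weak_train[0][0])
    # Phase 1: fit the weak labels; clean data is used only to pick a checkpoint.
    best_w, best_acc = w, accuracy(w, clean)
    for _ in range(steps):
        w = gradient_step(w, weak_train, lr)
        acc = accuracy(w, clean)
        if acc > best_acc:
            best_w, best_acc = w, acc
    # Phase 2: start from that checkpoint and keep training on the clean data.
    w_cft = best_w
    for _ in range(steps):
        w_cft = gradient_step(w_cft, clean, lr)
    return best_w, w_cft

# Toy data: x = (bias, feature). Gold rule: feature > 0.
# The weak rule is biased and only fires for feature > 0.5.
weak_train = [((1.0, f / 10), 1 if f > 5 else 0) for f in range(-10, 11)]
clean = [((1.0, -0.6), 0), ((1.0, -0.2), 0), ((1.0, 0.2), 1), ((1.0, 0.6), 1)]
w_ws, w_cft = continuous_fine_tuning(weak_train, clean)
print(accuracy(w_ws, clean), accuracy(w_cft, clean))
```

Note that the same clean samples serve as the validation set in phase 1 and as training data in phase 2, so no extra annotation is needed for the second phase.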

⇒ the net benefit of using sophisticated WSL approaches may be significantly overestimated and impractical for real-world use cases.

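- Simply continuing to fine-tune on the clean data already gives existing WSL methods a substantial performance boost (even when the number of clean samples is small).

- For L2R on the Yelp dataset, performance actually drops after CFT. One possible reason is that L2R already uses the validation loss to update its parameters, so the validation samples may add little value when reused for CFT.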

⇒ Pre-training on more data clearly helps to overcome biases from weak labels.

- pre-training provides the model with an inductive bias to seek more general linguistic correlations instead of superficial correlations from the weak labels

⇒ contradictory samples play a more important role here and at least a minimum set of contradictory samples are required for CFT to be beneficial
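Here "contradictory" is read as a clean sample whose gold label differs from its weak label. Under that reading, splitting a clean set into agreeing and contradictory parts could look like the sketch below. The tuple layout, the function name, and the examples are hypothetical.

```python
def split_by_agreement(clean_samples):
    """Split (text, gold_label, weak_label) triples by whether the labels agree."""
    agreeing = [s for s in clean_samples if s[1] == s[2]]
    contradictory = [s for s in clean_samples if s[1] != s[2]]
    return agreeing, contradictory

# Made-up clean samples: 1 = positive, 0 = negative.
samples = [
    ("The food was great", 1, 1),
    ("Worst meal of my life!", 0, 1),   # the weak label is wrong here
    ("Terrible service", 0, 0),
]
agreeing, contradictory = split_by_agreement(samples)
print(len(agreeing), len(contradictory))
```

Running CFT on only one of the two subsets is one way to probe which kind of sample drives the improvement.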

Open Review 💗

NA

Discussion 🍟

NA

Major Takeaways 😃

NA

Conclusion ✨

Strength

- If a proposed WSL method requires extra clean data, such as for validation, then the simple \(\text{FT}_W\)+CFT baseline should be included in evaluation to claim the real benefits gained by applying the method.

Weakness

- it may be possible to perform model selection by utilizing prior knowledge about the dataset

- For low-resource languages where no PLMs are available, training may not be that effective

- We have not extended our research to more diverse types of weak labels

Reference

NA
