[Paper Analysis] Entropy-Driven Mixed-Precision Quantization for Deep Network Design

If the overhead of QAT could be reduced, would it be fine for QAT to replace PTQ?

One-Line Summary ✔

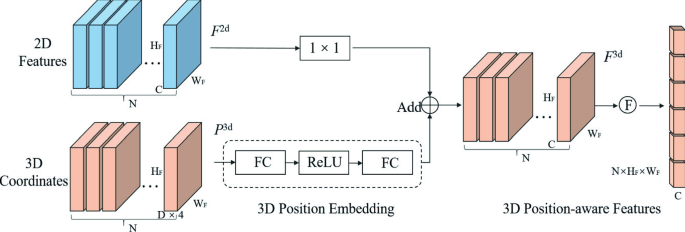

A one-stage solution that optimizes both the architecture and the corresponding quantization jointly and automatically. The key idea of our approach is to cast the joint architecture design and quantization as an Entropy Maximization process.

- The network's representation capacity, measured by entropy, is maximized under the given computational budget.

Quantization Entropy Score (QE-Score) with calibrated initialization, to measure the expressiveness of the system.

Quantization Bits Refinement within the evolution algorithm, to adjust the mixed-precision quantization.

- Each layer is assigned a proper quantization precision.

Entropy-based ranking strategy for mixed-precision quantization networks.

- The overall design loop can run on the CPU; no GPU is required.
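To make the search loop concrete, here is a minimal sketch of a budget-constrained evolutionary search that mutates both layer widths and per-layer bit-widths. Everything in it is an assumption for illustration: `toy_score` is a placeholder and NOT the paper's QE-Score, and the dense-layer chain, `BIT_CHOICES`, and the weight-size budget are hypothetical. It only uses the standard library, so it runs on a CPU.

```python
import math
import random

BIT_CHOICES = (2, 4, 8)  # assumed candidate bit-widths per layer


def weight_bytes(widths, bits, in_dim=16):
    """Weight storage in bytes for a chain of dense layers."""
    total_bits, prev = 0, in_dim
    for w, b in zip(widths, bits):
        total_bits += prev * w * b
        prev = w
    return total_bits / 8


def toy_score(widths, bits):
    """Placeholder expressiveness proxy (NOT the paper's QE-Score)."""
    return sum(math.log(w) + math.log(b) for w, b in zip(widths, bits))


def mutate(widths, bits, rng):
    """Change either one layer's width or one layer's bit-width."""
    widths, bits = list(widths), list(bits)
    i = rng.randrange(len(widths))
    if rng.random() < 0.5:
        widths[i] = max(4, widths[i] + rng.choice((-4, 4)))
    else:
        bits[i] = rng.choice(BIT_CHOICES)  # the "bits refinement" step
    return widths, bits


def search(budget_bytes, steps=500, pop_size=16, seed=0):
    rng = random.Random(seed)
    population = [([4, 4, 4], [2, 2, 2])]  # smallest config as the seed
    for _ in range(steps):
        parent = rng.choice(population)
        child = mutate(*parent, rng)
        if weight_bytes(*child) > budget_bytes:
            continue  # reject candidates that exceed the budget
        population.append(child)
        # Rank by the proxy score only; no training is involved.
        population.sort(key=lambda c: toy_score(*c), reverse=True)
        del population[pop_size:]
    return population[0]


best_widths, best_bits = search(budget_bytes=2048)
```

The point of the sketch is the structure: mutate, check the budget, rank by a training-free score, keep the top candidates. Swapping in a real entropy-based score would only change `toy_score`.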

Introduction 🙌

Why Is Quantization Needed?

- Most IoT devices have very limited on-chip memory.

- Deploying deep CNN on Internet-of-Things (IoT) devices is challenging due to the limited computational resources, such as limited SRAM memory and Flash storage.

The key is to control the peak memory during inference.
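As a rough illustration of what "peak memory" means here, the sketch below estimates peak activation memory for a purely sequential network. The assumptions are mine, not the paper's: only the input and output tensors of the running layer are alive at once, there are no skip connections, and weights and scratch buffers are ignored. The shapes and bit-widths are made-up examples.

```python
def peak_activation_bytes(shapes, act_bits):
    """Peak activation memory (bytes) of a sequential network.

    shapes[i] is the (C, H, W) tensor at position i and act_bits[i] is its
    bit-width. Layer i reads tensor i and writes tensor i + 1, so both
    tensors must fit in memory at the same time.
    """
    def nbytes(shape, bits):
        c, h, w = shape
        return c * h * w * bits / 8

    sizes = [nbytes(s, b) for s, b in zip(shapes, act_bits)]
    return max(a + b for a, b in zip(sizes, sizes[1:]))


# Hypothetical tensor shapes: input image followed by three feature maps.
shapes = [(3, 32, 32), (16, 32, 32), (32, 16, 16), (64, 8, 8)]

peak_8bit = peak_activation_bytes(shapes, [8, 8, 8, 8])
peak_mixed = peak_activation_bytes(shapes, [8, 4, 8, 8])
```

In this toy case, lowering only the largest feature map from 8 to 4 bits reduces the peak, which is the motivation for choosing precision per layer instead of globally.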

Trends in Traditional Lightweight CNN

(1) Re-design a small network for IoT devices, then (2) compress the network size by mixed-precision quantization.

Limitations

The incoherence of such a two-stage design procedure leads to the inadequate utilization of resources, therefore producing sub-optimal models within tight resource requirements for IoT devices.

Related Work 😉

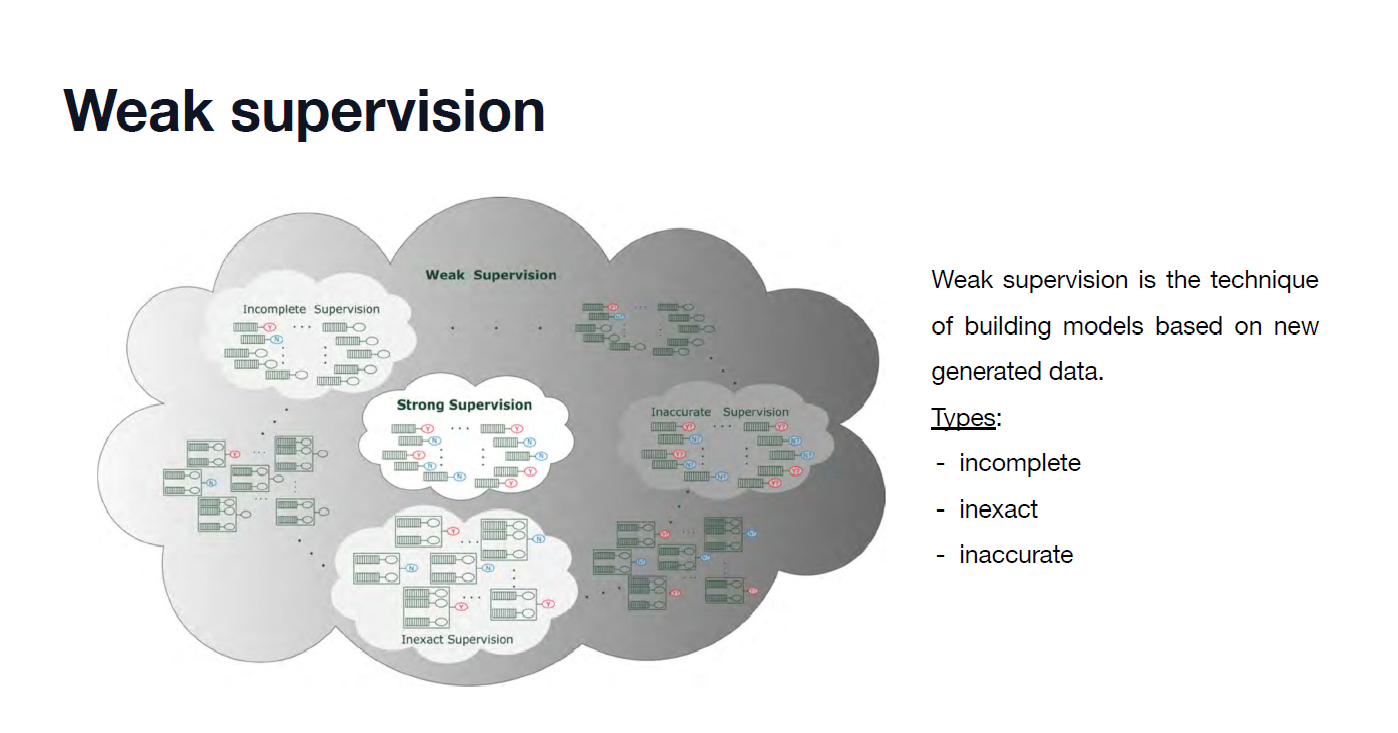

Training-free NAS methods

- Accelerate model design by using a proxy metric instead of a training-based accuracy indicator.

Limitation:

- Still lack key techniques for incorporating mixed-precision quantization.

Challenges and Main Idea 💣

C1) Designing models under limited resources remains a challenging issue.

C2) Low precision has a narrow range of expressible values, which causes persistent accuracy degradation.
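C2 can be seen with a small experiment. The sketch below uses a plain uniform symmetric quantizer, which is a common textbook scheme and an assumption on my part, not necessarily the quantizer used in the paper. It measures the worst-case rounding error on a grid over [-1, 1] for several bit-widths.

```python
def quantize(x, bits, x_max=1.0):
    """Uniform symmetric fake quantization: float -> signed int -> float."""
    q_max = 2 ** (bits - 1) - 1           # e.g. 127 for 8 bits, 1 for 2 bits
    scale = x_max / q_max                 # step between representable values
    q = round(x / scale)
    q = max(-q_max, min(q_max, q))        # clip to the representable range
    return q * scale


# Evenly spaced test values covering [-1, 1].
values = [i / 50 - 1.0 for i in range(101)]


def max_error(bits):
    """Worst-case absolute quantization error over the test grid."""
    return max(abs(v - quantize(v, bits)) for v in values)


errors = {bits: max_error(bits) for bits in (2, 4, 8)}
```

With 2 bits this quantizer can only represent -1, 0, and 1, so the worst-case error is far larger than at 8 bits. This is why a single low bit-width for every layer tends to hurt accuracy.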

Idea) Build a training-free NAS on mixed-precision quantization for selected IoT devices.
