[논문분석] Railroad is not a Train: Saliency as Pseudo-pixel Supervision for Weakly Supervised Semantic Segmentation (CVPR 2021)

한줄요약 ✔

- WSSS 사용할 시 Image-level weak supervision의 한계.

- sparse object coverage

- inaccurate object boundary

- co-occurring pixels from non-target objects

- Explicit Pseudo-pixel Supervision (EPS): two weak supervision으로 pixel-level feedback을 얻는다.

localization map\(\rightarrow\) distingusih different objects.- CAM(Class Activation Map)으로 생성.

saliency map\(\rightarrow\) rich boundary information.- Saliency detection model으로 생성.

Preliminaries 🍱

- 일반적으로 WSSS의 전체적인 파이프라인은 two stage로 구성되어 있다.

- pseudo-mask 생성 (image classifier 이용).

- pseudo-mask를 GT로 사용하여 각 iteration마다 GT 갱신하며 recursive하게 segmentation model 학습.

Challenges and Main Idea💣

C1) sparse object coverage

C2) inaccurate object boundary

C3) co-occurring pixels from non-target objects

Idea) Explicit Pseudo-pixel Supervision (EPS) 제안

Problem Definition ❤️

Given a weak dataset \(\mathcal{D}\).

Return a model \(\mathcal{S}\).

Such that \(\mathcal{S}\) approximates the performance of its fully-supervised model \(\mathcal{T}\).

Proposed Method 🧿

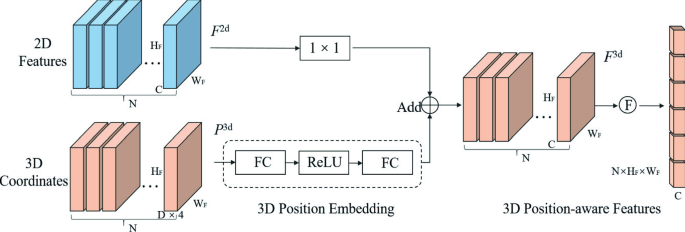

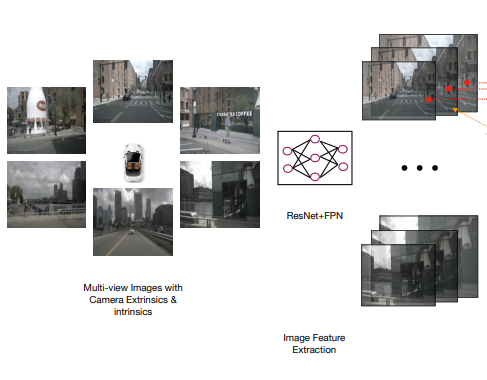

Model Architecture (EPS)

[Figure 2]

- Background를 포함해서 \(C+1\)개의 class로 분류하는 classifier로 \(C+1\)개의 localization map을 생성해서 saliency map과 비교한다.

- Foreground

- \(C\)개의 localization map을 합쳐서 foreground map을 생성하고, 이를 saliency map과 매칭시킨다. ⇒ improving boundaries of objects.

- Background

- background localization map과 saliency map의 바깥부분 \((1−M_s)\) 을 매칭시킨다. ⇒ mitigate the co-occuring pixels of non-target objects.

- 고양이와 개가 함께 나타나는 이미지를 고려해 봅시다. 여기서 고양이와 개가 대상(target)이고, 다른 객체들은 대상이 아닌(non-target) 객체입니다. 이 때, “co-occurring pixels of non-target objects”는 예를 들어 바닥, 벽, 나무, 가구 등의 픽셀들로 구성될 것입니다. 이 픽셀들은 여러 객체들과 함께 등장하며, 각 객체의 일부가 될 수 있지만, 그 자체로는 특정 객체를 나타내지는 않습니다.

- background localization map과 saliency map의 바깥부분 \((1−M_s)\) 을 매칭시킨다. ⇒ mitigate the co-occuring pixels of non-target objects.

Loss Function

\(\mathcal{L}_{total}=\mathcal{L}_{sal}+\mathcal{L}_{cls}\)

- \(\mathcal{L}_{sal}={1 \over H \cdot W} \Vert M_s - \hat{M}_s \Vert^2\) .

- \(M_s\): the off-the-shelf saliency detection model– PFAN [51] trained on DUTS dataset

- \(H \cdot W\)를 나누는 이유?

- normalization: 이미지의 크기가 클수록 loss 값도 커질 가능성이 있습니다. 이를 방지하기 위해 loss 값을 이미지의 크기로 나누어 normalize합니다.

- marked by red box/arrorw in Figure 2.

- the sum of pixel-wise differences between our estimated saliency map and an actual saliency map.

- involved in updating the parameters of \(C + 1\) classes, including target objects and the background.

- \(\mathcal{L}_{cls}={-1 \over C} \Sigma^C_{i=1} y_i log \sigma (\hat{y_i}) + (1-y_i) log (1-\sigma (\hat{y_i}))\) .

- \(\sigma\): sigmoid function.

- 이진 교차 엔트로피 손실(Binary Cross-Entropy Loss) 공식.

- marked by blue box/arrorw in Figure 2.

- only evaluates the label prediction for \(C\) classes, excluding the background class.

- the gradient from \(\mathcal{L}_{cls}\) does not flow into the background class.

Joint Training

- By jointly training the two objectives, we can synergize the localization map and the saliency map with complementary information

- we observe that noisy and missing information of each other is complemented via our joint training strategy, as illustrated in Figure 3

- Missing objects: \((c)\)에서 놓친 의자와 보트 객체를 \((d)\)에서는 잘 segment하는 모습.

- noise 제거: \((c)\)에 존재하는 비행기의 contrail(연기) 같은 것들이 \((d)\)에서는 제거된 모습.

\(\hat{M}_s=\lambda M_{fg} + (1-\lambda)(1-M_{bg})\)

- \(\hat{M}_s\): estimated saliency map.

- \(M_s\): actual saliency map.

- \(M_{fg}\): foreground saliency map.

- \(M_{fg}=\Sigma^C_{i=1} y_i \cdot M_i \cdot \mathbb{1} [ \mathcal{O} (M_i,M_s) > \tau]\) .

- \(\mathcal{O}(M_i,M_s)\): is the function to compute the overlapping ratio between \(M_i\) and \(M_s\).

- \(M_i\): \(i\)-th localization map.

- Assigned to the foreground if \(M_i\) is overlapped with the saliency map more than \(\tau%\)%, otherwise the background.

- \(y_i \in \mathbb{R}^C\): binary image-level label \([0 or 1]\).

- 모델 예측이 객체가 존재하는 경우에 대해서만 각 객체에 대한 saliency map을 합하여 최종 foreground saliency map을 구성한다.

- \(M_{fg}=\Sigma^C_{i=1} y_i \cdot M_i \cdot \mathbb{1} [ \mathcal{O} (M_i,M_s) > \tau]\) .

- \(M_{bg}\): background saliency map.

- \(M_{bg}=\Sigma^C_{i=1} y_i \cdot M_i \cdot \mathbb{1} [ \mathcal{O} (M_i,M_s) <= \tau] + M_{C+1}\) .

- \(\lambda \in [0,1]\): a hyperparameter to adjust a weighted sum of the foreground map and the inversion of the background map.

Experiment 👀

Setup

Datasets

- PASCAL VOC 2012 and MS COCO 2014

- augmented training set with 10,582 images

- Augmentation 빼면 성능 어떤가??

Baseline

- ResNet38 pre-trained on ImageNet

Boundary Mismatch Problem

Co-occurrence Problem

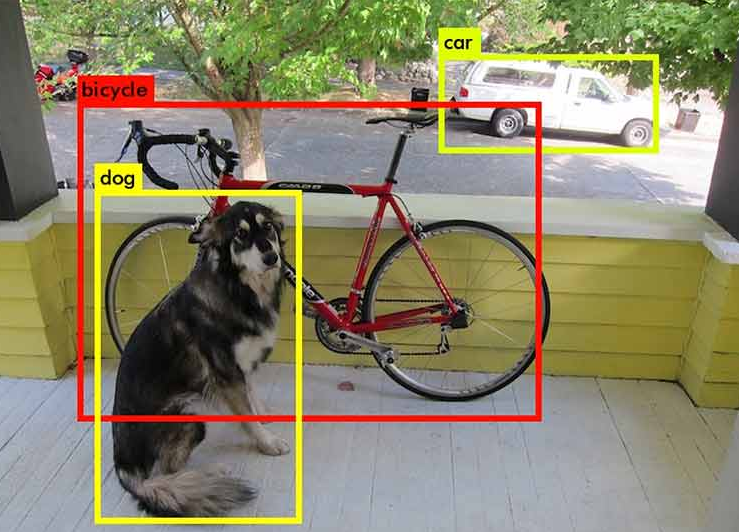

- What is it?

- Some background classes frequently appear with target objects in PASCAL VOC 2012

- Dataset:

PASCAL-CONTEXTdataset.- provides pixel-level annotations for a whole scene (e.g., water and railroad).

- Evaluation:

- We choose three co-occurring pairs; boat with water, train with railroad, and train with platform. We compare IoU for the target class and the confusion ratio \(m_{k,c}={FP_{k,c} \over TP_c}\) between a target class and its coincident class.

- \(FP_{k,c}\): the number of pixels mis-classified as the target class \(c\) for the coincident class \(k\).

- \(TP_c\): the number of true-positive pixels for the target class \(c\).

- \(k\): the coincident class.

- \(c\): the target class.

SEAM는 특이하게 self-supervised training을 기반으로 하기 때문에 서로 다른 객체에 대해 겹치는 픽셀들에 잘못된 target object 레이블을 할당하고 이것을 정답 레이블로 학습에 활용하여 더 confusion ratio가 더 높게 측정되는 모습이다.

Map Selection Strategies

- Naive strategy:

- The foreground map is the union of all object localization maps; the background map equals the localization map of the background class.

- Pre-defined class strategy:

- We follow the naive strategy with the following exceptions. The localization maps of several pre-determined classes (e.g., sofa, chair, and dining table) are assigned to the background map (i.e., pre-defined class strategy)

- 특정 객체들이 target objects가 아님을 알고 미리 배경으로 지정.

- We follow the naive strategy with the following exceptions. The localization maps of several pre-determined classes (e.g., sofa, chair, and dining table) are assigned to the background map (i.e., pre-defined class strategy)

- Our adaptive strategy:

- 상기 EPS 내용 참조.

⇒ Our adaptive 가 가장 IoU 수치가 높고, 이는 해당 전략이 target object를 가장 잘 표현함을 의미한다.

Comparison with state-of-the-arts

Accuracy of pseudo-masks

Accuracy of segmentation maps

Effect of saliency detection models

- Notably, our EPS using the unsupervised saliency model outperforms all existing methods using the supervised saliency model

Open Reivew 💗

NA

Discussion 🍟

NA

Major Takeaways 😃

NA

Conclusion ✨

- We propose a novel weakly supervised segmentation framework, namely explicit pseudo-pixel supervision (EPS).

Reference

NA

댓글남기기